I was lucky to join a project with vast scope and real-world impact: a SaaS platform for IoT devices.

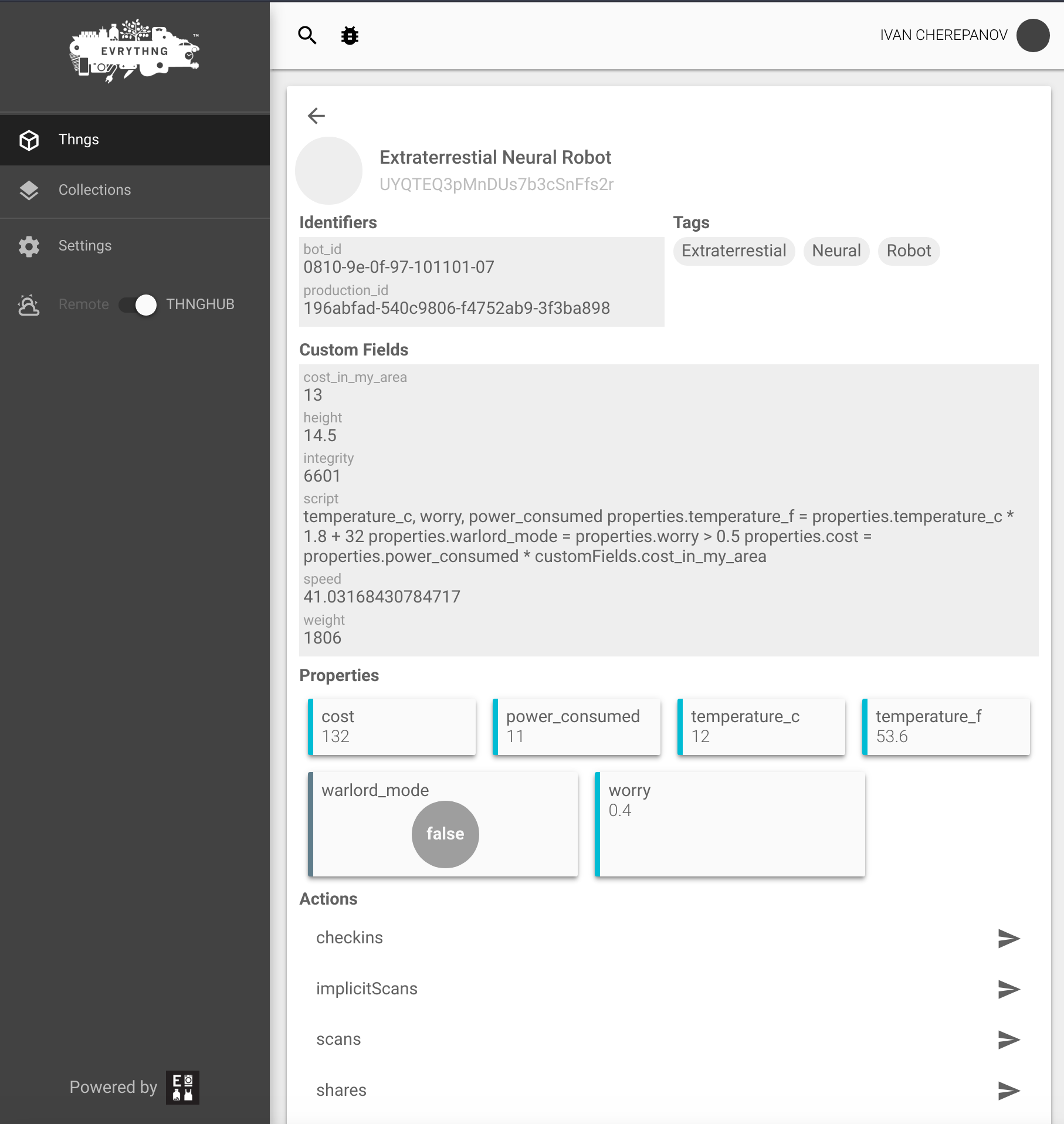

Initially, it wasn't focused on any particular industry, allowing any smart device with Internet access to persist its data and communicate in real time with other such devices.

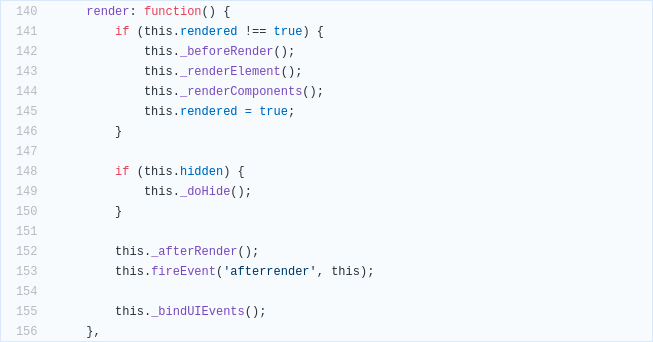

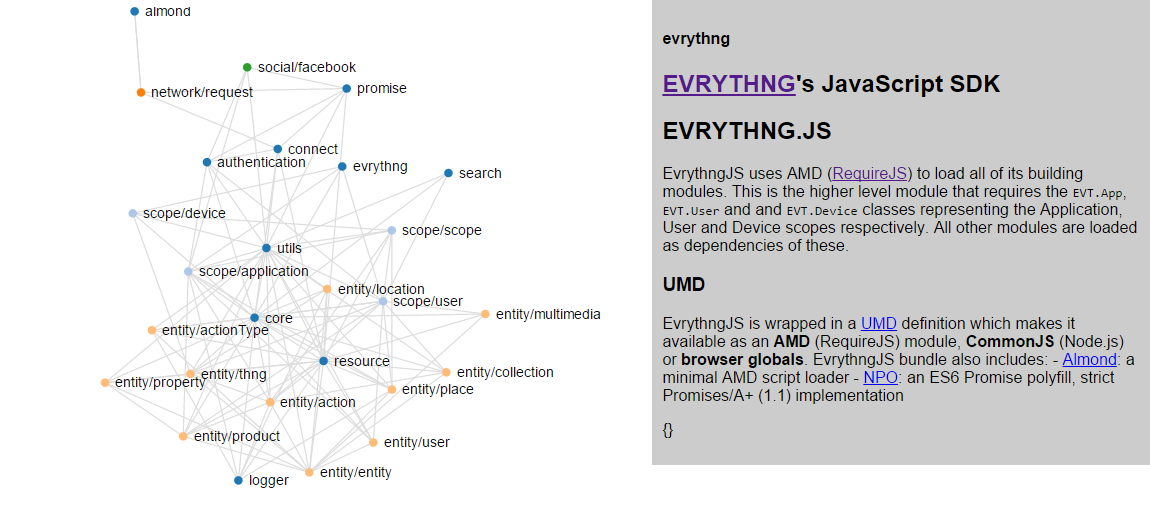

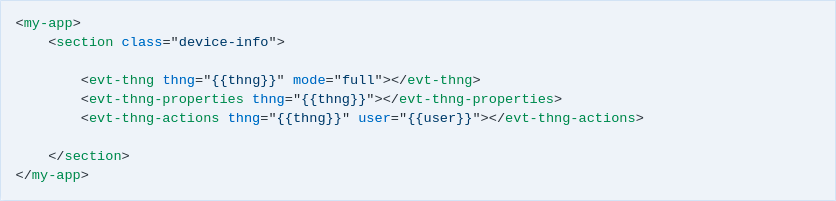

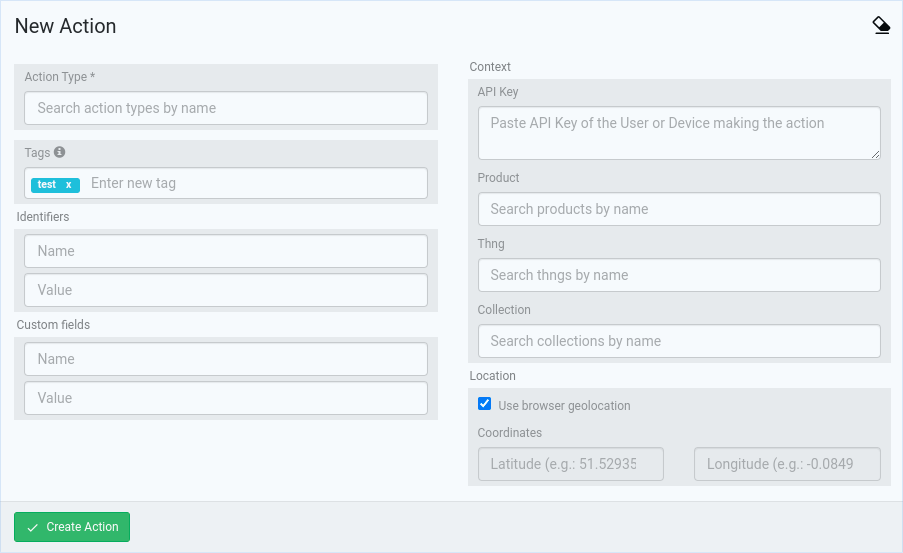

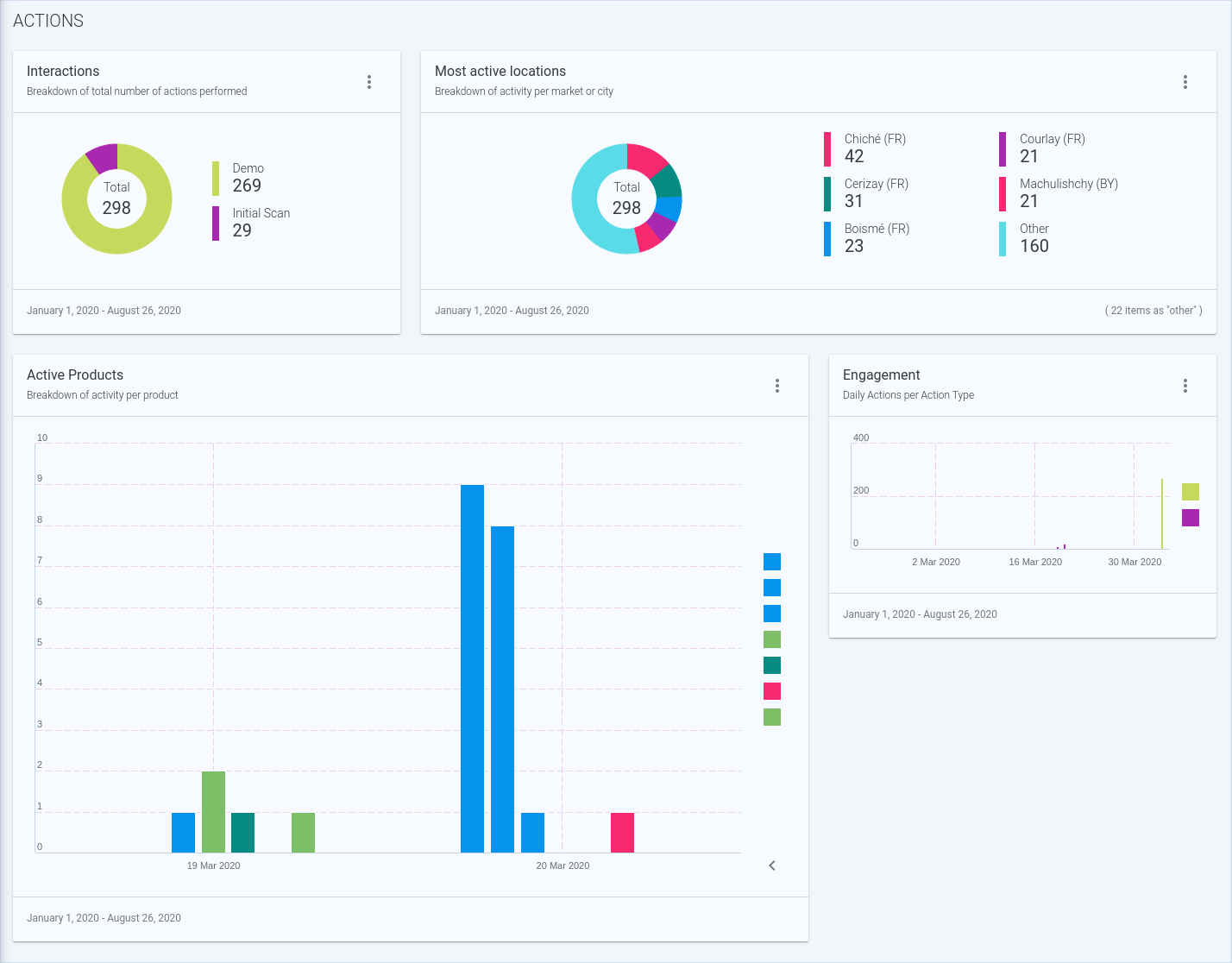

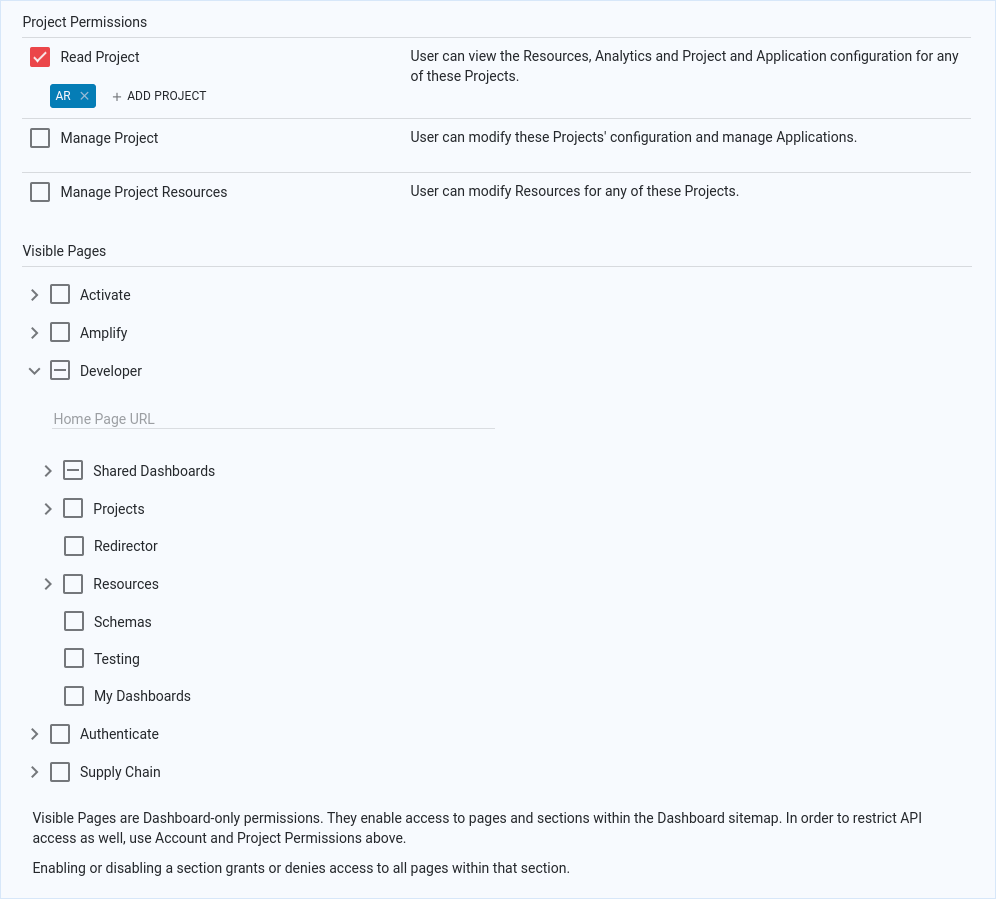

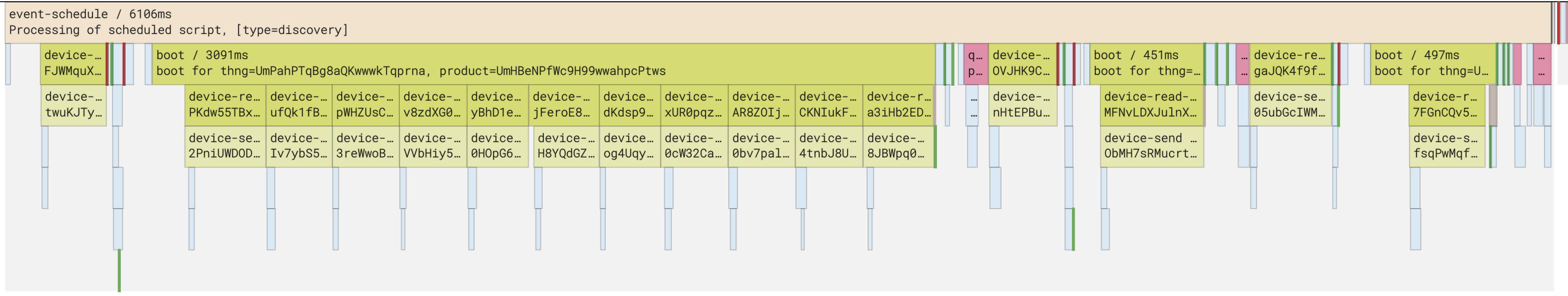

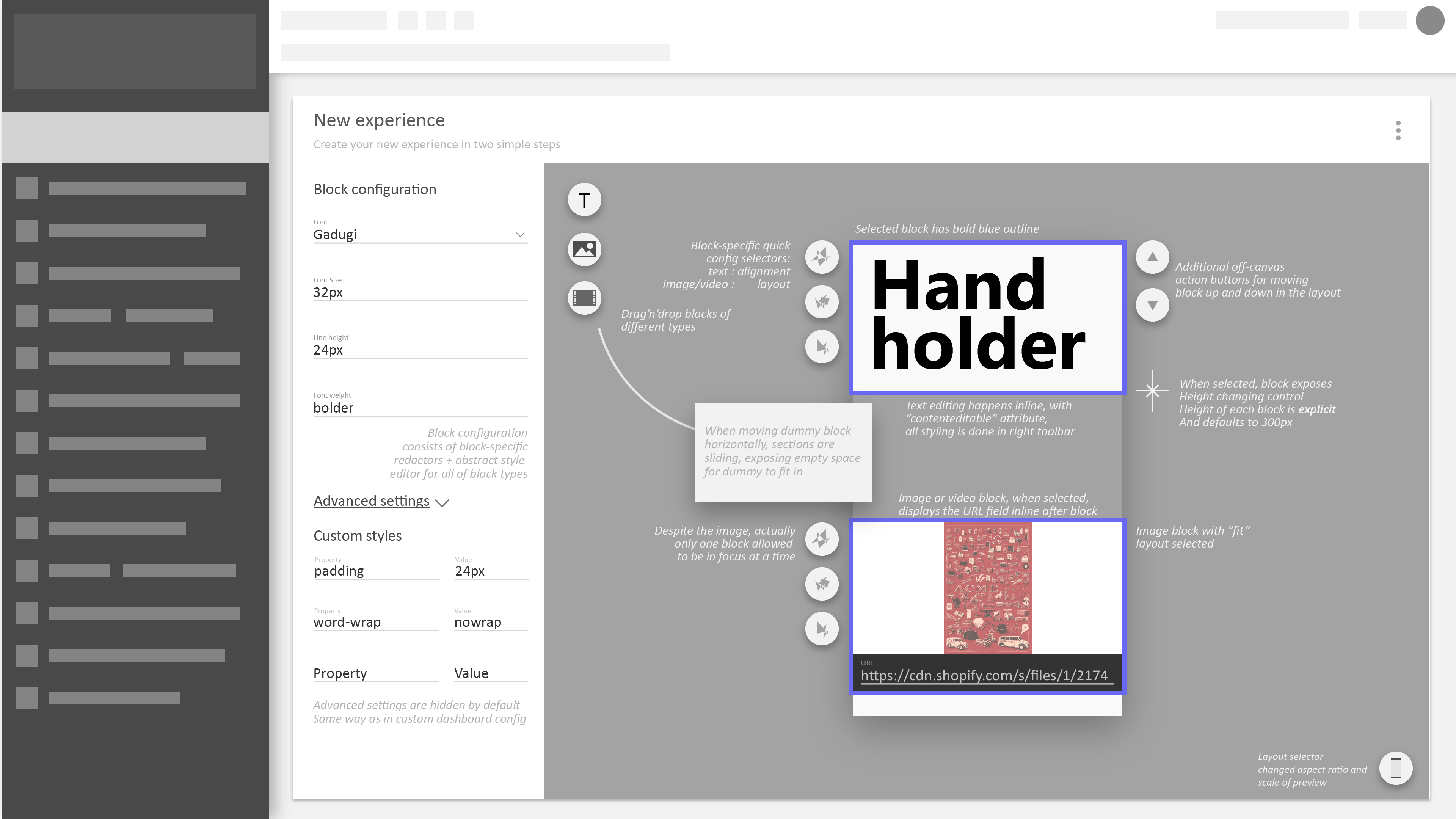

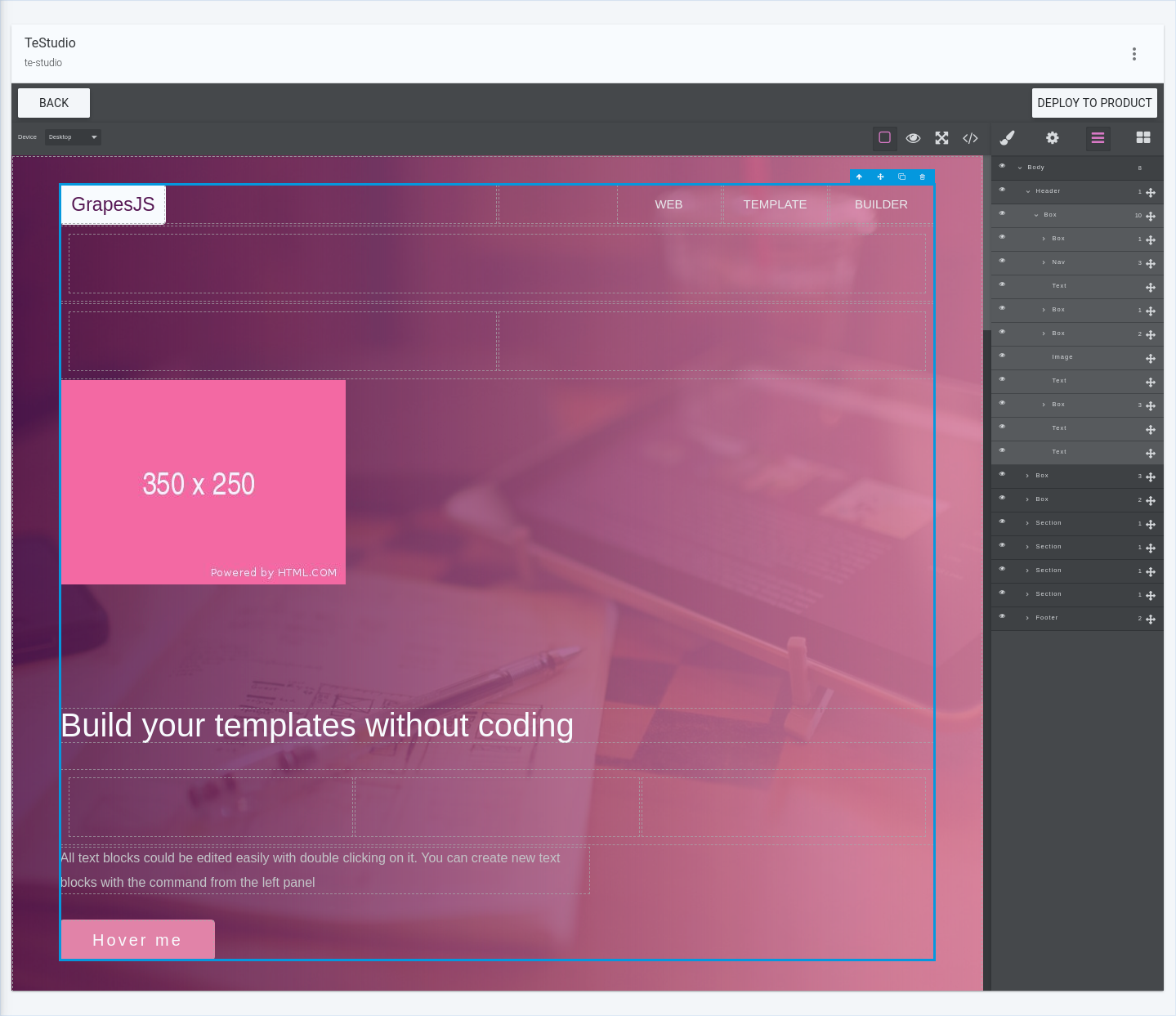

The Platform itself was extremely feature-rich: SDKs for building device firmware and client applications, a highly complex rule engine for processing incoming events, an abstract data model that could represent anything from a T-shirt to a smart water heater, rich analytics over collected data, geolocation for incoming events, image recognition, real-time pub/sub for most entities and events, fully customizable dashboards (akin to AWS CloudWatch), and much more.

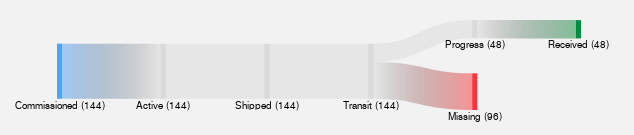

Over the years, I worked with most of the components in the Platform, applying them to vastly different use cases, ranging from a simple page for a digitized product to a full-fledged Warehouse Management and Analytics solution built on top of the main Platform.

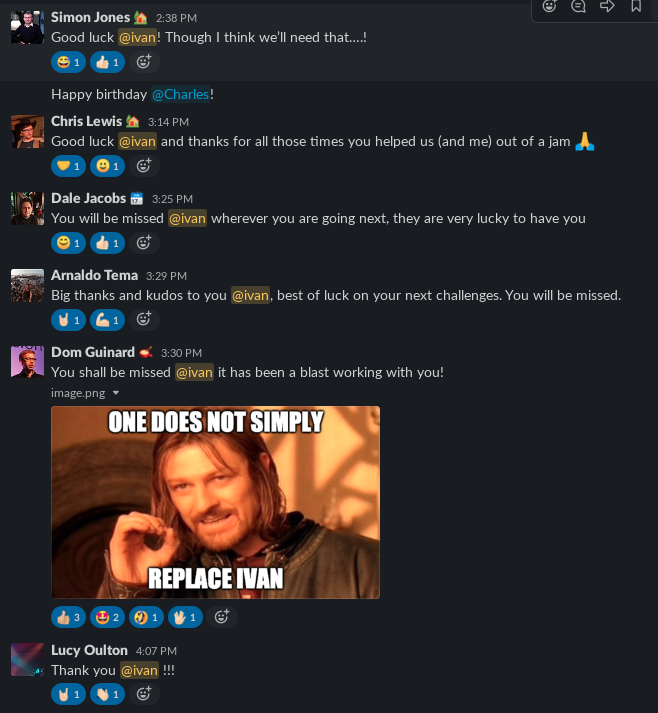

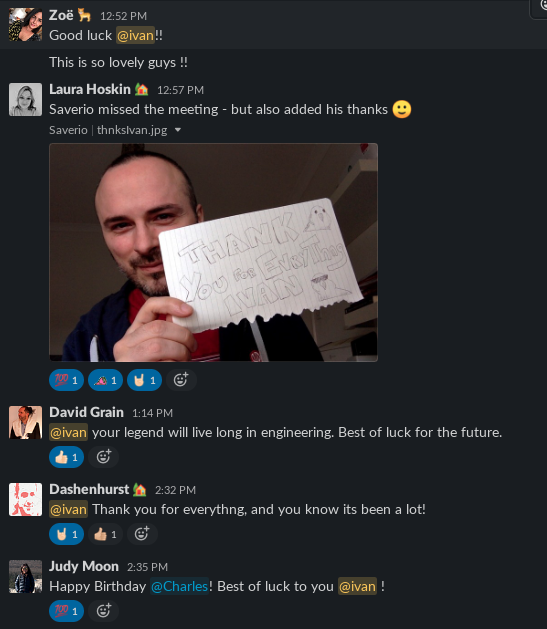

My last day at the Company is something I still remember warmly.

Later, the Company shifted its focus to Tagged Products use cases, collaborating with world-leading brands to digitize their products and their lifecycle in the supply chain.